Evidence-Based Medicine (EBM) is about supporting the decision to choose the best treatment for our patients. Since it first appeared in top medical journals in 1992,1 EBM has revolutionized medical practice and spread to other disciplines (such as dentistry) as a tool to support decision making in the provision of medical care.

The British Medical Journal concluded in 2007 that EBM represents one of the most important medical milestones of the last 160 years, which is quite an accolade when one considers all the other medical advances that were made during that timeframe.2

But what exactly is EBM and how can it help inform clinical practice?

Evidence-based medicine aims to apply the best available evidence gained from the scientific method to medical decision making.3 Evidence-based medicine was defined as “the process of systematically finding, appraising and using contemporaneous research findings as the basis for clinical decisions” in one of the earliest papers on the subject.1

One of the best-known figures in the field is David Sackett, who has written extensively on the subject and is credited with coining the phrase evidence-based medicine. In a paper from the mid-1990s,4 he and his coauthors offered the following definition: “Evidence based medicine is the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients.”

Sackett et al4 have previously written that “the practice of evidence based medicine means integrating individual clinical expertise with the best available external clinical evidence from systematic research.” The learning curves associated with performing different types of surgery6 mean that senior surgeons tend to have better outcomes.7

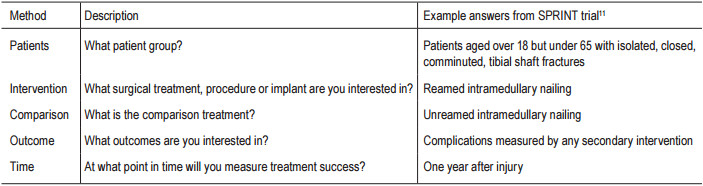

Table 1: Example PICOT grid for the five main factors of a clinical question

Sackett et al5 summarized the key steps of practicing EBM as follows:

Similar versions of these essential steps of EBM practice can be found throughout the EBM literature.8-10

There are five main factors to consider when generating the clinical question. A simple way to frame it is to use the acronym PICOT (Patients, Intervention, Comparison, Outcome, and Time). The PICOT grid for therapeutic studies is given in Table 1, along with some example answers from the published SPRINT trial.11

Selecting the most important terms from your PICOT answers will give you the search words you will need for the next stage of practicing EBM.
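As a rough illustration of turning PICOT answers into search words, the minimal Python sketch below joins synonyms within each factor with OR and joins the factors with AND, which is a common way to combine terms in bibliographic databases. The `Picot` class, the `build_query` function, and the example terms are hypothetical and chosen for illustration only; the terms are not copied from Table 1.

```python
from dataclasses import dataclass, fields


@dataclass
class Picot:
    """One list of candidate search terms per PICOT factor (illustrative structure)."""
    patients: list[str]
    intervention: list[str]
    comparison: list[str]
    outcome: list[str]
    time: list[str]


def build_query(picot: Picot) -> str:
    """Join synonyms within a factor with OR and join factors with AND.

    Factors with no terms are skipped, and multi-word terms are quoted
    so they are searched as phrases.
    """
    groups = []
    for factor in fields(picot):
        terms = getattr(picot, factor.name)
        if not terms:
            continue
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Hypothetical example terms for a therapeutic question about tibial fractures.
question = Picot(
    patients=["tibial shaft fracture", "tibial fracture"],
    intervention=["reamed intramedullary nailing"],
    comparison=["unreamed intramedullary nailing"],
    outcome=["reoperation"],
    time=[],  # left empty here, so it is omitted from the query
)
query = build_query(question)
print(query)
```

The resulting string can be pasted into a database search box and then refined by hand. Leaving a factor empty, as with time here, simply widens the search.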

One of the most popular information sources is PubMed, which is free to search. It can be accessed directly at: www.pubmed.gov. This comprehensive database of the life sciences, with a concentration on biomedicine, also has some full-text papers available for free.

PubMed is a powerful tool with many options. To help you find the published medical literature you require, please visit the series of brief animated tutorials with audio to learn more. Click on the ‘PubMed Tutorials’ link on the PubMed home page or go directly to: www.nlm.nih.gov/bsd/disted/pubmed.html

You can also find systematic reviews at The Cochrane Library: www.thecochranelibrary.com. Another popular service for biomedical records is Embase: www.embase.com (a subscription is required for this service). Don’t forget the resource that is your colleagues too!

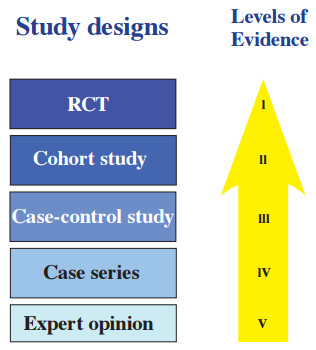

Levels of evidence are a method of arranging studies into a hierarchy based upon the quality of the evidence they produce as a result of their study designs (Figure 1).

Levels of evidence provide a concise and simple appraisal of study quality. The essence of levels of evidence is that, in general, cohort studies in which there are 2 groups to compare are better than single-arm studies, prospective studies are better than retrospective studies, and randomized studies are better than nonrandomized studies.
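The three general rules above can be sketched as a simple comparison between study designs. The Python example below is only an illustration under stated assumptions: the `StudyDesign` class and the `at_least_as_strong` function are hypothetical names, and the comparison is not a published grading scheme. It treats one design as at least as strong as another only when it is no worse on all three rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyDesign:
    """Three design features named in the text (illustrative structure)."""
    name: str
    comparative: bool   # two groups to compare rather than a single arm
    prospective: bool   # prospective rather than retrospective
    randomized: bool    # randomized rather than nonrandomized


def at_least_as_strong(a: StudyDesign, b: StudyDesign) -> bool:
    """Return True when design `a` is no worse than `b` on all three rules."""
    return (
        a.comparative >= b.comparative
        and a.prospective >= b.prospective
        and a.randomized >= b.randomized
    )


rct = StudyDesign("randomized controlled trial", True, True, True)
prospective_series = StudyDesign("prospective case series", False, True, False)
retrospective_cohort = StudyDesign("retrospective comparative cohort", True, False, False)

# The RCT is at least as strong as both other designs on every rule.
print(at_least_as_strong(rct, prospective_series))
print(at_least_as_strong(rct, retrospective_cohort))

# Neither of these two designs is better on every rule, so the three rules
# alone do not rank them against each other.
print(at_least_as_strong(prospective_series, retrospective_cohort))
print(at_least_as_strong(retrospective_cohort, prospective_series))
```

The last two comparisons show why such rules give only a rough ordering: some pairs of designs cannot be ranked by the rules alone.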

Levels of evidence should be used with caution as they only provide a rough guide to study quality and should not preclude a complete critical appraisal. In addition, Level I evidence may not be available for all clinical situations, in which case lower levels of evidence can still be valuable. An answer to a clinical question is found by analyzing all evidence of all grades. A single study does not provide a definitive answer.12

Although not shown in the diagram, the tip of the evidence iceberg is a meta-analysis. Examples of these can be found at www.cochranelibrary.com.

Figure 1: Study designs and levels of evidence

A meta-analysis is a systematic review that statistically combines several randomized controlled trials on the same subject, which gives it more statistical power than any single trial. What does this mean? If a sample size is too small, a study may not be able to detect differences in treatment effectiveness even if one treatment is truly superior to another. The ability to detect these differences is the statistical power of the study, which depends on the characteristics of the studied variables and on the sample size. Since we cannot change the characteristics of the variables we are studying, the only way to influence the power is to change the sample size. A larger sample size will bring more power to the study, and by combining several studies into a meta-analysis we can effectively achieve this.13
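To make the link between sample size and power concrete, the minimal Python sketch below uses a normal approximation for a two-sided comparison of two event rates. The function name `approx_power`, the event rates of 20% and 10%, and the group sizes are hypothetical values chosen for illustration and are not taken from any study cited here. Treating several small trials as one larger trial is also a simplification that ignores differences between trials.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(p1: float, p2: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided test comparing two proportions.

    Uses the normal approximation with unpooled variance and ignores the
    negligible chance of a significant result in the wrong direction.
    """
    standard_normal = NormalDist()
    standard_error = sqrt(
        p1 * (1 - p1) / n_per_group + p2 * (1 - p2) / n_per_group
    )
    critical_value = standard_normal.inv_cdf(1 - alpha / 2)
    return standard_normal.cdf(abs(p1 - p2) / standard_error - critical_value)


# Hypothetical event rates: 20% with one treatment, 10% with the other.
for n in (50, 200, 400):
    print(f"{n} patients per group: power is about {approx_power(0.20, 0.10, n):.2f}")
```

Under these assumptions the power rises steadily as the number of patients per group increases, which is the effect that pooling several trials on the same question is meant to achieve.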

Although the proportion is rising, fewer than 5% of trials in the orthopedic literature are RCTs.7 Moreover, although RCTs provide the highest level of evidence, an RCT is not always what is needed in orthopedics to answer a specific clinical question.5,14,15 Glasziou et al describe some historical examples of treatments whose effects enjoyed wide acceptance on the basis of evidence drawn from case series or non-randomized cohorts (for example, ether for anesthesia).16

Assigning levels of evidence to studies published in the literature is also relatively recent in orthopedics. The Journal of Bone and Joint Surgery, American Volume began running a quarterly “Evidence-Based Orthopaedics” section in 2000, which provided information on randomized trials published in a large number of other journals. Rating studies published in the journal with a level of evidence began in 2003. The Journal of Bone and Joint Surgery, American Volume uses a model of five levels for each of four distinct study types (therapeutic, prognostic, diagnostic, and economic or decision modeling).15

The Journal of Orthopaedic Trauma introduced level-of-evidence ratings for all therapeutic, prognostic, diagnostic and economic studies in March 2012.17 In an acknowledgement of the established role that EBM now plays in orthopedics and trauma, the authors noted “the widespread use of the levels of evidence rating system in other orthopaedic journals and subspecialty meetings.”

Despite the special challenges that EBM poses in orthopedics, there are ways to surmount them. While we obviously cannot blind the surgeon to the treatment choice, patients and independent outcome assessors can be blinded, which is a good example of how we can be creative in adapting standard research principles to suit the peculiarities of orthopedics.18 Other proposed solutions include evaluating the learning curve using appropriate statistical techniques and defining the intervention more precisely to reduce the variations between operations that affect surgical outcomes.6

Misclassification of fracture types often leads to bias. Therefore, please be sure to use a validated fracture classification such as the Müller / AO Classification of Long Bones.

Critical appraisal is an integral part of Evidence-Based Medicine. Its purpose is to identify methodological strengths and weaknesses in the literature, so evidence should be appraised for validity, importance and applicability to the clinical scenario.19 A suitably critiqued paper allows the reader to make an informed decision about the quality of the research evidence presented.

Critical appraisal checklists, which are a great help when interpreting scientific manuscripts, are available from the Centre for Evidence Based Medicine. Go to http://www.cebm.net/index.aspx?o=1913 for more information. The International Centre for Allied Health Evidence (iCAHE) also has a wide range of literature appraisal checklists, from case studies to randomized controlled trials, to download for free. Available at: www.unisa.edu.au/cahe/resources/cat/default.asp.

Having found the evidence and critically appraised the validity of the results, the most important question is whether the results are applicable to your patient. The benefits and limitations of applying the therapy should also be assessed.7

Sackett et al examine this issue across the range of clinically important needs.5 For example, is a diagnostic test available at your hospital? Were the study patients in a prognostic study similar in profile to your patient? Are the patient’s preferences satisfied by this particular treatment? It is also necessary to consider the level of patient compliance you can expect with a treatment regimen.

We hope that this article has piqued your interest in the world of Evidence-Based Medicine. However, as you learn and apply these and other EBM concepts, please always keep in mind that EBM can only ever inform your decision; it cannot make the decision for you.